Replication and reproduction of research findings are crucial for scientific progress. These processes allow the scientific community to assess the reliability of research outcomes, fostering a self-correcting mechanism and informing policy decisions.

A new IZA discussion paper by Abel Brodeur and more than 350 coauthors investigates the reproducibility of findings in leading economics and political science journals, covering articles published from 2022 onwards. The study computationally reproduces 110 articles and conducts sensitivity analyses on them. Small teams of “replicators” (PhD students, postdoctoral fellows, faculty, and researchers with PhDs) each analyzed an individual study. Each team computationally reproduced the results, identified coding errors, conducted sensitivity analyses, and wrote a report that was shared with the original authors.

High rate of reproducible results, but many coding errors

The study finds a high rate (over 85%) of fully computationally reproducible results: the code provided by the original authors runs and produces the same numerical results reported in their articles. This high rate likely reflects the data and code availability policies enforced by the journals, many of which employ a data editor.

However, fully reproducible results do not guarantee the absence of coding errors. Excluding minor issues, the study identified coding errors in about 25% of studies. Not all of these errors affect the final conclusions, but some were major, including large numbers of duplicated observations, incomplete interaction terms in difference-in-differences regressions, miscoding of the treatment variable, and model misspecification.

Are the results robust?

Replicators conducted 5,511 re-analyses, which involved changing the weighting scheme, control variables, or estimation method, or using new data.

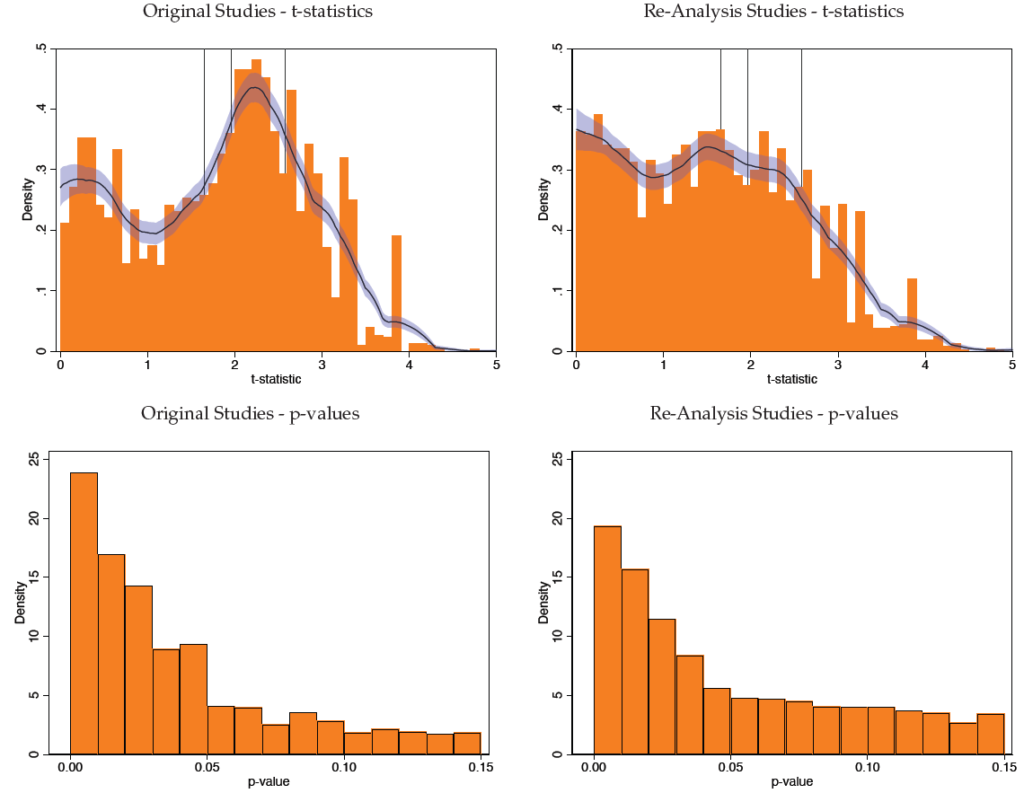

A visual analysis of the distribution of test statistics (see figure below) shows that, after re-analysis, much of the mass shifts out of the region just above the 5% significance threshold and into the 10%-significance and statistically insignificant regions. This suggests that re-analyses reduce the statistical significance of many originally published estimates.

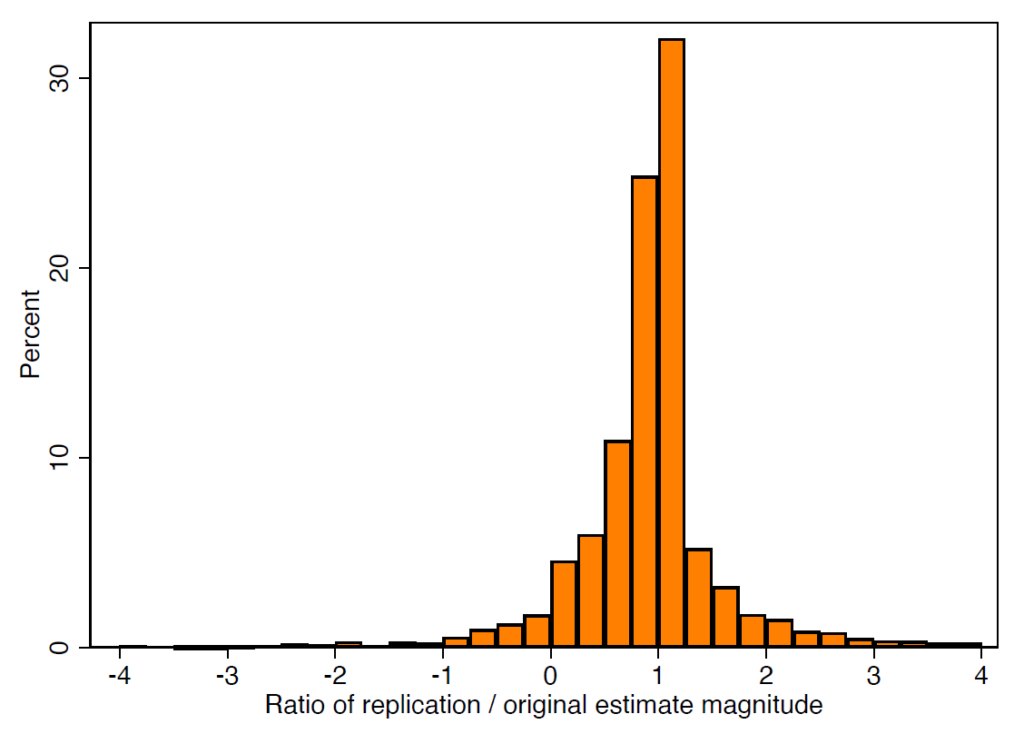

In terms of relative effect size (the re-analysis estimate divided by the original estimate), most re-analyses cluster around one, and about 17% are less than or equal to 0.5. Around half have a ratio greater than one, which suggests that the original authors may have reported conservative estimates.
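As a rough illustration of how the relative effect size statistic works, the sketch below divides each re-analysis estimate by its original estimate and reports the two shares quoted above. It assumes the ratio is re-analysis over original; the function names and coefficient pairs are hypothetical, not taken from the study.

```python
def relative_effect_size(original: float, reanalysis: float) -> float:
    """Ratio of a re-analysis point estimate to the original point estimate."""
    # Assumption: the ratio is defined as re-analysis / original.
    if original == 0:
        raise ValueError("original estimate must be non-zero")
    return reanalysis / original


def summarize(pairs: list[tuple[float, float]]) -> dict[str, float]:
    """Shares of re-analyses with a ratio <= 0.5 and with a ratio > 1."""
    ratios = [relative_effect_size(orig, new) for orig, new in pairs]
    n = len(ratios)
    return {
        "share_at_most_half": sum(r <= 0.5 for r in ratios) / n,
        "share_above_one": sum(r > 1 for r in ratios) / n,
    }


# Hypothetical (original, re-analysis) coefficient pairs, not data from the study.
pairs = [(0.20, 0.22), (0.20, 0.09), (-0.50, -0.55), (1.00, 0.95)]
print(summarize(pairs))
```

Because the ratio is signed, a re-analysis that flips the sign of the estimate yields a negative ratio and therefore also counts toward the "at most 0.5" share.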

The study defines robustness reproducibility as a re-analysis estimate having the same sign as the original and remaining statistically significant at the 5% level. Under this definition, robustness reproducibility is about 70%. In addition, half of the original point estimates that were significant at the 10% level (but not at the 5% level) become statistically insignificant at the 10% threshold under the replicators’ robustness checks. For original estimates significant at the 5% level (but not at the 1% level), over a quarter of re-analyses become insignificant at the 10% threshold.
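The robustness reproducibility criterion can be sketched as a simple check on pairs of estimates. This is a minimal sketch under stated assumptions: p-values come from a normal approximation (z = coefficient / standard error), original estimates are grouped into significance bands by their own p-value, and all names and numbers are hypothetical rather than the study's code or data.

```python
import math
from dataclasses import dataclass


@dataclass
class Estimate:
    """A point estimate and its standard error."""
    coef: float
    se: float

    def p_value(self) -> float:
        # Assumption: two-sided p-value from a normal approximation.
        z = abs(self.coef / self.se)
        return math.erfc(z / math.sqrt(2))


def significance_band(p: float) -> str:
    """Strictest conventional level (1%, 5%, 10%) at which p is significant."""
    if p < 0.01:
        return "1%"
    if p < 0.05:
        return "5%"
    if p < 0.10:
        return "10%"
    return "insignificant"


def is_robust(original: Estimate, reanalysis: Estimate) -> bool:
    """Same sign as the original and still significant at the 5% level."""
    same_sign = original.coef * reanalysis.coef > 0
    return same_sign and reanalysis.p_value() < 0.05


def robustness_rate(pairs: list[tuple[Estimate, Estimate]]) -> float:
    """Share of (original, re-analysis) pairs that pass is_robust."""
    return sum(is_robust(o, r) for o, r in pairs) / len(pairs)


def share_losing_10pct(pairs: list[tuple[Estimate, Estimate]], band: str) -> float:
    """Among pairs whose original is in `band`, share of re-analyses with p >= 0.10."""
    subset = [r for o, r in pairs if significance_band(o.p_value()) == band]
    if not subset:
        raise ValueError(f"no original estimates in band {band!r}")
    return sum(r.p_value() >= 0.10 for r in subset) / len(subset)


# Hypothetical (original, re-analysis) pairs, not data from the study.
pairs = [
    (Estimate(0.30, 0.10), Estimate(0.25, 0.10)),   # z = 3.0 -> z = 2.5
    (Estimate(0.21, 0.10), Estimate(0.15, 0.10)),   # z = 2.1 -> z = 1.5
    (Estimate(0.18, 0.10), Estimate(-0.05, 0.10)),  # z = 1.8 -> sign flips
]
print(f"robustness reproducibility: {robustness_rate(pairs):.0%}")
print(f"5%-band originals losing 10% significance: {share_losing_10pct(pairs, '5%'):.0%}")
```

Multiplying the two coefficients is a compact same-sign test that also treats a re-analysis estimate of exactly zero as a sign change.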

Which robustness checks matter more?

The study’s robustness checks fall broadly into eight categories. Robustness reproducibility rates were lowest when replicators changed the dependent variable (45%) or the sample (64%), and highest when they introduced new data (87%). The remaining categories (changing control variables, estimation methods, inference methods, the main independent variable, or the weighting scheme) had robustness reproducibility rates of around 75%.
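Assuming each category's rate is simply the share of re-analyses in that category that meet the robustness criterion (same sign, significant at the 5% level), the per-category figures could be tabulated as in the sketch below. The function name and records are hypothetical, and only three of the eight categories are shown.

```python
from collections import defaultdict


def rates_by_category(records: list[tuple[str, bool]]) -> dict[str, float]:
    """Share of re-analyses flagged robust within each robustness-check category."""
    totals = defaultdict(int)
    passed = defaultdict(int)
    for category, robust in records:
        totals[category] += 1
        passed[category] += robust  # a bool counts as 0 or 1
    return {c: passed[c] / totals[c] for c in totals}


# Hypothetical (category, robust?) records, not the study's data.
records = [
    ("dependent variable", False),
    ("dependent variable", True),
    ("sample", True),
    ("sample", False),
    ("sample", True),
    ("new data", True),
    ("new data", True),
]
# Print categories from least to most robust.
for category, rate in sorted(rates_by_category(records).items(), key=lambda kv: kv[1]):
    print(f"{category}: {rate:.0%}")
```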

Barriers to reproducibility

The lack of raw data substantially limited what replicators could do across all categories of analysis. Raw data limitations hindered robustness checks for 19% of teams and the re-coding of key variables for 18%. In addition, 12% and 13% of teams believed the lack of raw data impeded their ability to perform replications and extensions, respectively. Furthermore, 7% of teams felt the original paper was so unclear that it hindered their robustness checks.

Conclusion

The large scale of this ongoing project has the potential to influence research norms and researcher behavior by promoting more rigorous methodologies and discouraging questionable research practices.

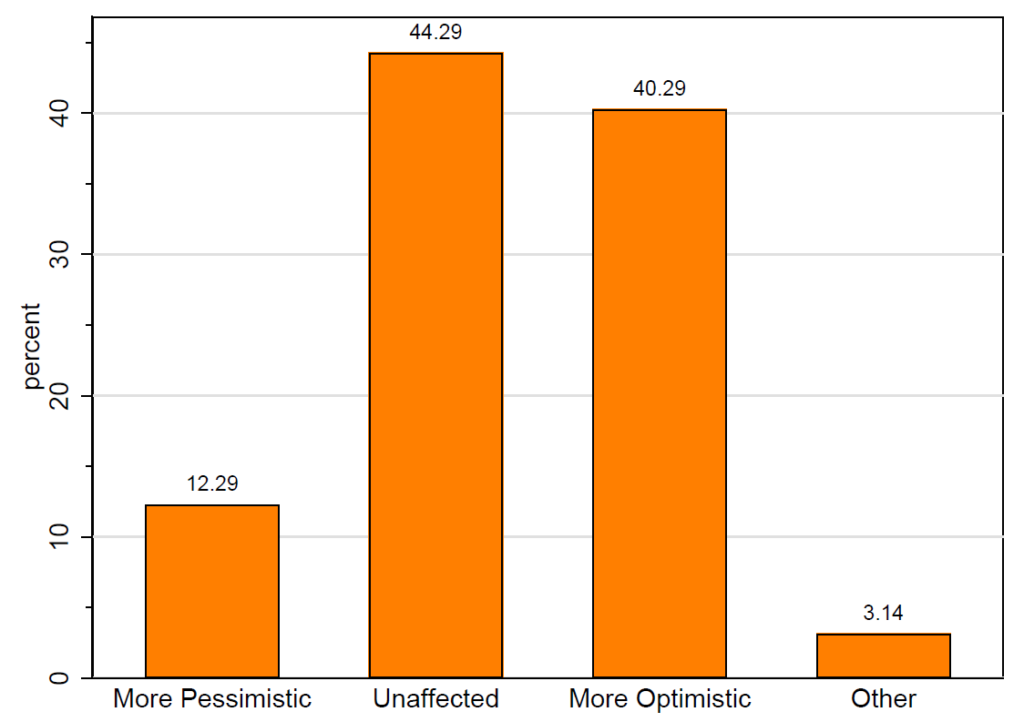

On a positive note, over 40% of replicators reported a more optimistic view of the discipline due to the quality of the replication packages they reproduced.