Most academic disciplines use a statistical threshold to decide whether a hypothesis is likely to be true. If a test statistic falls below this threshold, the finding is considered too uncertain to be presented as true. An unintended consequence is that researchers, who know the conventional threshold, come to see it as a stumbling block for their ideas, since positive findings are more likely to be published.

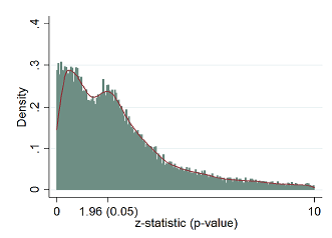

Abel Brodeur, Mathias Lé, Marc Sangnier, and Yanos Zylberberg collected the values of test statistics published in three of the most prestigious economics journals over the period 2005-2011. The distribution of test scores shows a hole just before the threshold, that is, in the region where results are too uncertain to be called true, and a surplus just after it.

This finding suggests that researchers may be tempted to present tests with higher statistics in order to increase their chances of being published. For example, imagine that there are three types of results. Green lights are findings which are very likely to be true. Red lights are findings where effects are too uncertain (or too small) to be considered. Amber lights are in between. The paper argues that researchers mainly paint amber lights green, rather than altering results that were clearly red or clearly green to begin with. According to the authors’ calculations, ten to twenty percent of published tests are misallocated.

The graph shows the distribution of test scores, where a z-statistic of 1.96, which corresponds to a two-sided p-value of 0.05, is the conventional threshold used in economics. Tests with z-statistics lower than 1.96 are usually regarded as unconvincing. The picture shows the hole in the distribution just below the conventional threshold and the excess mass just above it, which hints at a systematic misallocation.
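To make the threshold concrete, here is a minimal Python sketch, assuming a standard normal null distribution. It converts a z-statistic into a two-sided p-value and counts how many statistics fall in a narrow band on either side of 1.96. The function names and the band width of 0.2 are illustrative choices, and the simple band count is only a rough way to look for a hole and an excess; it is not the procedure used by the authors.

```python
import math


def two_sided_p_value(z: float) -> float:
    """Two-sided p-value of a z-statistic under a standard normal null."""
    return math.erfc(abs(z) / math.sqrt(2))


def band_counts(z_stats, threshold=1.96, width=0.2):
    """Count statistics just below and just above the threshold.

    `width` is a hypothetical band size chosen for illustration only.
    """
    below = sum(1 for z in z_stats if threshold - width <= abs(z) < threshold)
    above = sum(1 for z in z_stats if threshold <= abs(z) < threshold + width)
    return below, above
```

Calling `two_sided_p_value(1.96)` gives a value of roughly 0.05. If reported statistics were spread smoothly around the threshold, the two counts from `band_counts` would be expected to be of similar size, so a markedly larger count above than below would be consistent with the pattern described here.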

The study also identifies several paper and author characteristics that seem to be related to this misallocation, such as being a young researcher in a tenure-track job. There is no indication of misallocation of tests for randomized controlled trials. Surprisingly, data and code availability do not seem to be associated with substantially less misallocation.

The results presented in this paper may have interesting implications for the academic community. Even though it is unclear whether these biases are larger or smaller in other journals and disciplines, the findings raise questions about the importance given to test values and about the consequences for a discipline of ignoring negative results. Suggestions have already been made to reduce this trend. Journals have been launched with the ambition of giving authors a place to publish negative findings. There is also growing pressure on researchers to submit their methodology before running an experiment.