In academia, researchers use statistical thresholds to decide whether a hypothesis is likely to be true. An unintended consequence of these thresholds is that they may encourage p-hacking. The term p-hacking refers to making research choices in a way that artificially inflates statistical significance. P-hacking can take various forms, including how data are cleaned, how variables are defined, and which specifications are chosen.
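To see why such choices inflate significance, consider a rough simulation. It is only a sketch under strong assumptions that are not taken from the paper: there is no true effect, each alternative specification yields an independent standard normal z-statistic, and the researcher reports a result as significant if any specification clears the 5% threshold. The function names and the number of simulated studies are hypothetical.

```python
import random
from statistics import NormalDist

# Two-sided critical value for the 5% significance level (about 1.96).
Z_CRIT = NormalDist().inv_cdf(0.975)


def simulated_false_positive_rate(n_specs: int, n_studies: int = 20000, seed: int = 0) -> float:
    """Share of null studies with at least one 'significant' specification.

    Assumption: the z-statistics of the specifications are independent draws
    from a standard normal distribution, i.e. there is no true effect.
    """
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_studies):
        if any(abs(rng.gauss(0.0, 1.0)) > Z_CRIT for _ in range(n_specs)):
            hits += 1
    return hits / n_studies


def analytic_false_positive_rate(n_specs: int, alpha: float = 0.05) -> float:
    """Probability that at least one of n_specs independent tests rejects."""
    return 1.0 - (1.0 - alpha) ** n_specs


if __name__ == "__main__":
    for k in (1, 5, 10):
        print(k, simulated_false_positive_rate(k), analytic_false_positive_rate(k))
```

In real research, specifications are correlated rather than independent, so the inflation would typically be smaller than this idealized case suggests. The direction of the effect is the point of the illustration.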

In recent years, concerns that p-hacking undermines research credibility have led to increased interest in pre-analysis plans and pre-registration, among other tools (see a new IZA discussion paper for more on this).

In another new IZA discussion paper, Abel Brodeur, Nikolai Cook, and Anthony Heyes investigate the extent of p-hacking in studies using the online platform Amazon Mechanical Turk (MTurk). Use of this platform in economics and management research has grown at an unparalleled rate over the past decade, partly because it lets researchers build large samples at low cost. However, this growth has been accompanied by increasing suspicion in some research communities about the reliability of results from studies using MTurk.

Substantial p-hacking and publication bias found

The paper provides the first systematic investigation of the statistical practices of the research community itself when using MTurk, and the extent to which those practices render MTurk-based empirical results untrustworthy. The practices under study are those that have become focal in recent assessments of research credibility elsewhere, namely (1) p-hacking, (2) publication bias (or selective publication), and (3) the presentation of results from plausibly under-powered samples.

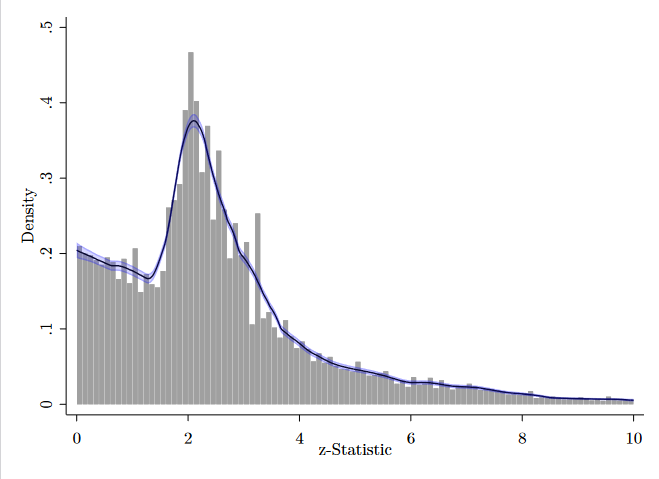

The authors analyze the universe of hypothesis tests, around 23,000 in total, from MTurk papers published between 2010 and 2020 in all journals categorized as either 4 or 4* in the 2018 edition of the Chartered Association of Business Schools’ Academic Journal Guide. Their findings suggest that the distribution of test statistics (see figure below) from MTurk studies exhibits patterns consistent with the presence of considerable p-hacking and publication bias.

The distribution exhibits a pronounced global and local maximum around a z-statistic value of 1.96, which corresponds to the widely accepted threshold for statistical significance at the 5% level, that is, a two-sided p-value of 0.05. This maximum is coupled with a shift of mass away from the marginally statistically insignificant interval, which is indicative of p-hacking.
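The link between a z-statistic of 1.96 and a p-value of 0.05 can be checked with a short calculation. The sketch below assumes a two-sided test with a standard normal null distribution; the function names are illustrative and not taken from the paper.

```python
from statistics import NormalDist

STD_NORMAL = NormalDist()  # mean 0, standard deviation 1


def two_sided_p_value(z: float) -> float:
    """Two-sided p-value of a z-statistic under a standard normal null."""
    return 2.0 * (1.0 - STD_NORMAL.cdf(abs(z)))


def critical_z(alpha: float) -> float:
    """Smallest |z| that is significant at level alpha in a two-sided test."""
    return STD_NORMAL.inv_cdf(1.0 - alpha / 2.0)


if __name__ == "__main__":
    print(round(two_sided_p_value(1.96), 3))  # 0.05
    print(round(critical_z(0.05), 2))         # 1.96
```

A test statistic just below 1.96 therefore lands in the "marginally insignificant" interval, which is where the missing mass in the distribution is observed.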

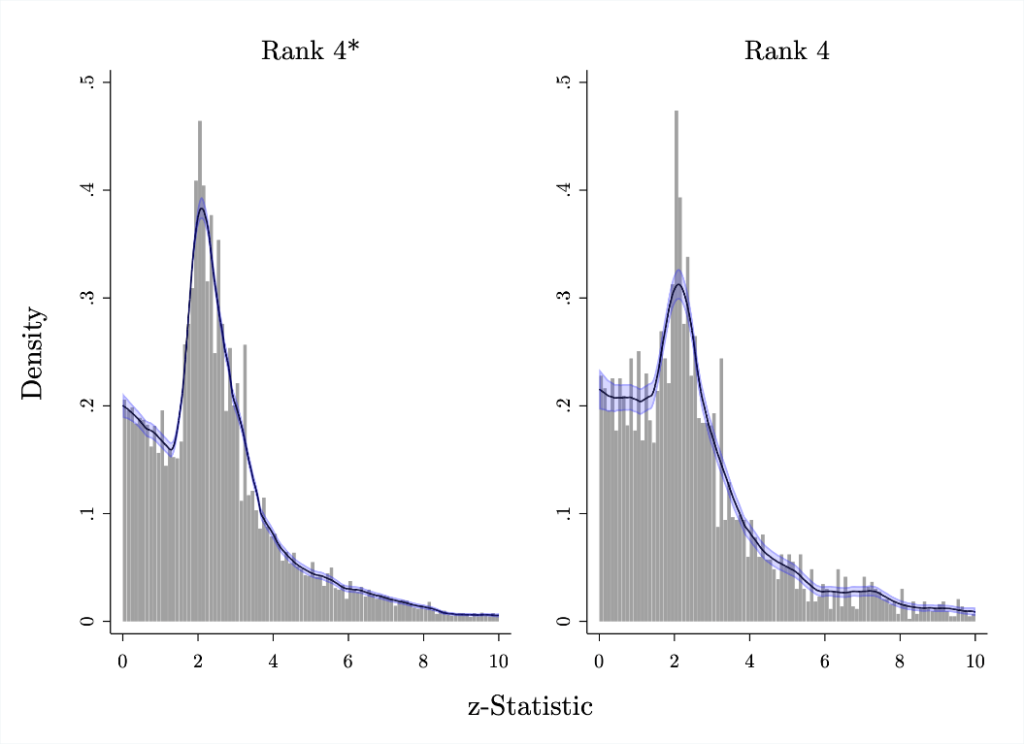

This pattern of test statistics is persistent over time and roughly equally present in papers published in elite (rank 4*) and top (rank 4) journals.

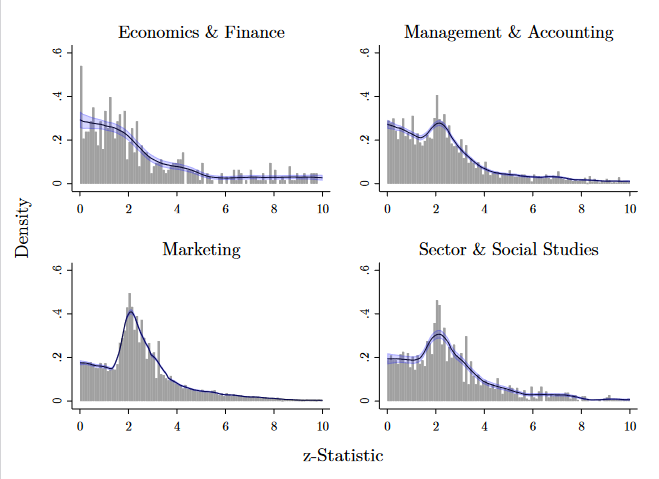

The extent of the problem varies across the business, economics, management and marketing research fields (with marketing especially afflicted).

Underpowered studies with small sample sizes

The power of a statistical test is the probability of detecting an effect (rejecting the null hypothesis of no effect) when a true effect is present. The appropriate choice of sample size, and therefore level of power, is a central element of experimental research design.
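How power depends on sample size can be illustrated with a simple approximation. The sketch below is not the authors' method. It assumes a two-arm experiment with equal group sizes, a two-sided test at the 5% level, and a normal approximation for the difference in means; the standardized effect size `d` and the sample sizes are hypothetical inputs.

```python
from math import sqrt
from statistics import NormalDist

STD_NORMAL = NormalDist()


def power_two_arm(d: float, n_per_arm: int, alpha: float = 0.05) -> float:
    """Approximate power of a two-sided two-sample z-test.

    d is the true difference in means divided by the common standard
    deviation (a hypothetical standardized effect size).
    """
    z_crit = STD_NORMAL.inv_cdf(1.0 - alpha / 2.0)
    # Expected value of the z-statistic when the true effect is d.
    shift = d * sqrt(n_per_arm / 2.0)
    # Probability that the z-statistic falls in either rejection region.
    return STD_NORMAL.cdf(shift - z_crit) + STD_NORMAL.cdf(-shift - z_crit)


if __name__ == "__main__":
    for n in (25, 50, 100, 200, 400):
        print(n, round(power_two_arm(0.2, n), 3))
```

With no true effect (`d = 0`) the function returns the significance level itself, and for a fixed effect size the power rises as the number of subjects per arm grows.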

However, most MTurk studies use small sample sizes (with a median of 249 subjects per experiment) without justifying how the particular sample size was chosen. Cost does not seem to be the reason, as the average cost of an additional data point (in the analyzed sample of studies) is 1.30 USD – and less than 1 USD in 70 percent of cases.
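A back-of-the-envelope calculation shows what a sample size justification might look like and what it would cost. The sketch below assumes a two-arm design, a two-sided 5% test, a target power of 80%, and a hypothetical standardized effect size of 0.2; none of these values come from the paper. The only figure taken from the article is the average cost of 1.30 USD per data point, which is treated here as a constant price per subject.

```python
from math import ceil
from statistics import NormalDist

STD_NORMAL = NormalDist()
COST_PER_SUBJECT_USD = 1.30  # average cost of a data point reported in the article


def required_n_per_arm(d: float, power: float = 0.80, alpha: float = 0.05) -> int:
    """Subjects per arm needed for a two-sided two-sample z-test.

    Uses the standard normal-approximation formula
    n = 2 * ((z_{1 - alpha/2} + z_{power}) / d) ** 2, rounded up.
    """
    z_alpha = STD_NORMAL.inv_cdf(1.0 - alpha / 2.0)
    z_power = STD_NORMAL.inv_cdf(power)
    return ceil(2.0 * ((z_alpha + z_power) / d) ** 2)


def total_cost_usd(n_per_arm: int, arms: int = 2) -> float:
    """Total cost of the experiment at a constant price per subject."""
    return n_per_arm * arms * COST_PER_SUBJECT_USD


if __name__ == "__main__":
    n = required_n_per_arm(0.2)  # hypothetical effect size
    print(n, 2 * n, round(total_cost_usd(n), 2))
```

Under these assumptions, detecting a small effect requires several hundred subjects per arm, well above the median of 249 subjects per experiment, while the total outlay remains on the order of a thousand dollars.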

More rigorous attention to statistical practice needed

The authors describe their findings as “in one sense pessimistic and in another optimistic.” On the one hand, they find the credibility of results contained in the existing corpus of research using the MTurk platform to be substantially compromised: “If a reader were to pick at random a study from our sample, our analysis points to this result being unlikely to be replicable.”

On the other hand, the flaws they identify relate to the way in which the research community conducts MTurk experiments and selects results for publication, rather than to flaws inherent in the platform itself. This distinction is important as it suggests that there is no reason – at least from this perspective – for researchers to stop using MTurk and other similar platforms.

Instead, the authors call for more rigorous attention to statistical practice. In particular, the use of larger samples to provide appropriately powered experiments should become more common – which seems feasible, given that this is an area of research where data points can be purchased on the cheap.